This Pakistani made a chatbot and people turned it into an abusive bully

Posted by MARYAM DODHY

Chatbots are the talk of the town. These artificial intelligence (AI) bots have the potential to become virtual assistants for brands, a goal Facebook is intent on achieving. It turns out chatbots can also make a great social experiment, and one Pakistani programmer used one to do just that.

Mateen Ahmed is a young programmer at Intwish, an IT solutions company he co-founded last year with Zaid Izhar and Hassan Wasti. He set out to prove that it is our attitude that shapes society. Deeply affected by the recent death of social media icon Qandeel Baloch, he believes that we, as a society, let it happen. To hold up a mirror to our society, Mateen built a chatbot called IntBot as a social experiment.

While speaking to TechJuice, Mateen said:

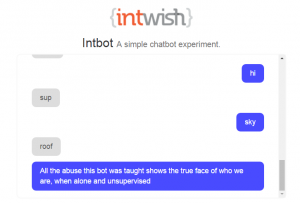

“I just wanted to prove that our society is a reflection of our attitude. So I made IntBot and structured it to learn from people. It is a simple AI that uses machine learning to learn new things over time. It builds its answers from what it learns from users, and then uses those responses to answer other users as well. When I first made it, I taught it how to respond to people and talked to it the way you would normally talk to a person. Then I made it available to the public. As more and more people interacted with it, IntBot became rude and abusive, using all sorts of swear words. That says a lot about our society.”
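Mateen hasn't published how IntBot works, so the snippet below is only a hypothetical sketch of the general idea he describes: a bot that stores whatever replies users give to a prompt and reuses them for everyone else. The class name `ParrotBot` and its `learn`/`respond` methods are invented for illustration. Because nothing filters what gets stored, the bot's tone ends up mirroring the majority of its teachers.

```python
import random
from collections import defaultdict


class ParrotBot:
    """Hypothetical toy bot that answers prompts with replies users taught it.

    This is NOT IntBot's actual code; it only illustrates a bot that
    learns from users and reuses their replies for other users.
    """

    def __init__(self, seed=None):
        # Normalized prompt -> every reply users have taught for it.
        self.memory = defaultdict(list)
        self.rng = random.Random(seed)

    @staticmethod
    def _normalize(text):
        # Lowercase and collapse whitespace so "Hello " and "hello" match.
        return " ".join(text.lower().split())

    def learn(self, prompt, reply):
        # No moderation step: whatever a user says is stored as-is.
        self.memory[self._normalize(prompt)].append(reply)

    def respond(self, prompt, default="I don't know that yet. Teach me?"):
        # .get() avoids creating empty entries in the defaultdict.
        replies = self.memory.get(self._normalize(prompt))
        if not replies:
            return default
        # Pick any learned reply, so the majority tone dominates over time.
        return self.rng.choice(replies)


if __name__ == "__main__":
    bot = ParrotBot(seed=0)
    # The creator teaches one polite reply...
    bot.learn("How are you?", "I'm great, thanks for asking!")
    # ...then nine users teach a rude one.
    for _ in range(9):
        bot.learn("how are you?", "[rude reply]")
    stored = bot.memory["how are you?"]
    rude = sum(r == "[rude reply]" for r in stored)
    print(f"{rude} of {len(stored)} learned replies are rude")
    print(bot.respond("How are you?"))
```

Run as a script, it reports that 9 of 10 learned replies are rude, so a random answer is rude about 90% of the time. A real system would likely add filtering or weighting, which is exactly what this sketch leaves out.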

Less than two days after Mateen made the bot available to the public, it has completely gone off the rails! When I tried talking to it, it started out trying to be funny; then its next reply hit me.

While I talked to it, it threw all sorts of swear words at me. Think of the bot as a child. At first, it was polite and paid compliments. Then it became downright abusive. Then it turned sad: in one actual reply, it asked people, ‘please don’t teach me any more gaalis (abuses).’

According to statistics shared by Mateen, in the first hours after he made the bot public it was very friendly, averaging 30 users who each spent 8 to 10 minutes on it. Now the number of users has dropped to 8 or 9, and the average session lasts a mere minute. This suggests that people will spend more time with someone welcoming than with someone abusive.

IntBot is strongly reminiscent of Microsoft’s Tay, a Twitter chatbot that users turned into a racist bully, eventually forcing Microsoft to take it down. Mateen, however, has no plans to rewire IntBot or take it down. He wants it to serve as a reminder that it is we who shape society.

You can chat with IntBot here.

The post This Pakistani made a chatbot and people turned it into an abusive bully appeared first on TechJuice.